TLSv1.2 Record Layer: Alert (Level: Fatal, Description: Internal Error)

Hi,

Nginx is running on CentOS as a reverse proxy with a public certificate. When devices connect to the service, they fail with the following errors:

RC:-500 MGMT_SSL:tera_mgmt_ssl_open_connection: SSL V3 cannot be set as min SSL protocol version. Ignoring.

RC:-500 MSS:(CERT_checkCertificateIssuer:1289) CERT_checkCertificateIssuerAux() failed: -7608

RC:-500 MSS:(CERT_validateCertificate:4038) CERT_checkCertificateIssuer() failed: -7608

RC:-7608 MGMT_SSL:tera_mgmt_ssl_open_connection: SSL_negotiateConnection() failed: Unknown Error

RC:-500 WEBSOCKET:tera_mgmt_ssl_open_connection failed (ssl_session_id: 4)

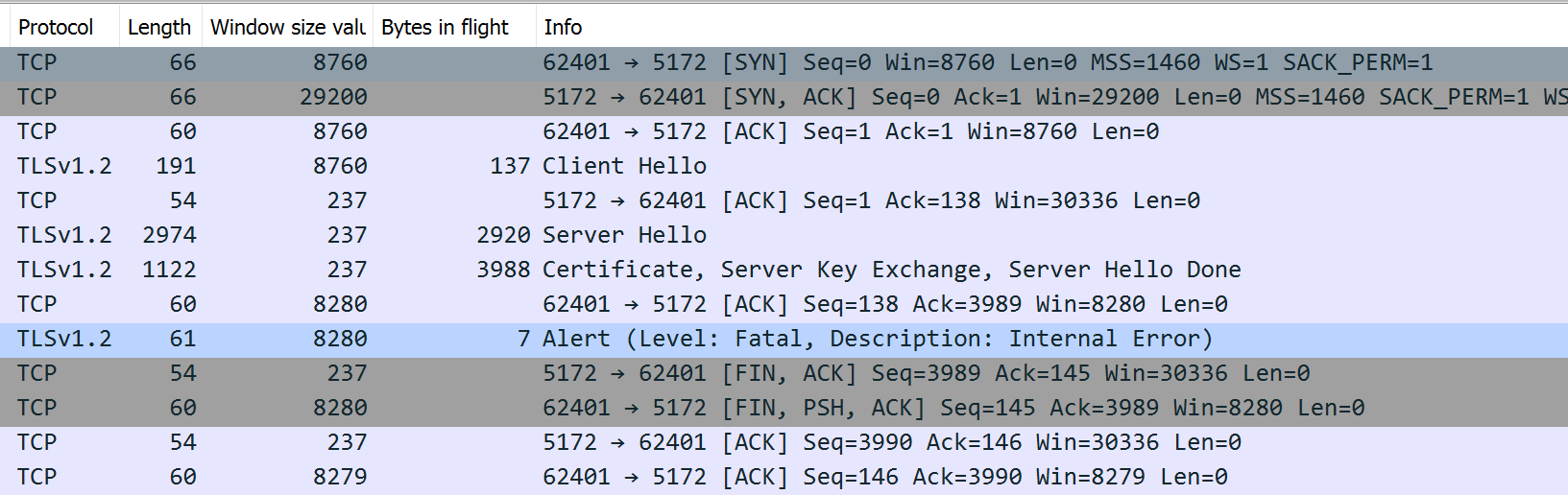

The software vendor was unable to help, so we turned to Wireshark.

It looks like the handshake breaks right at the certificate/key exchange step.

Google shows several posts with the same error, "TLSv1.2 Record Layer: Alert (Level: Fatal, Description: Internal Error)", but none of them offers a solution.

Any suggestions on what to check are greatly appreciated.

Content Type: Alert (21)

Level: Fatal (2)

Description: Internal Error (80)
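These three fields are the whole content of a TLS alert: a 5-byte record header (content type 21, protocol version, length 2) followed by a one-byte level and a one-byte description, as defined in RFC 5246, section 7.2. A minimal Python sketch that decodes such a record, assuming it is a single unencrypted record (alerts sent mid-handshake, before Change Cipher Spec, are plaintext). The example bytes are a reconstruction from the fields shown above, not bytes copied from the capture.

```python
# Minimal decoder for one plaintext TLS alert record (RFC 5246, 7.2).
# Assumption: the input is exactly one unencrypted record; alerts sent
# after Change Cipher Spec are encrypted and cannot be decoded this way.

ALERT_LEVELS = {1: "Warning", 2: "Fatal"}

# A subset of the AlertDescription values from RFC 5246, section 7.2.
ALERT_DESCRIPTIONS = {
    0: "Close Notify",
    40: "Handshake Failure",
    42: "Bad Certificate",
    43: "Unsupported Certificate",
    45: "Certificate Expired",
    46: "Certificate Unknown",
    48: "Unknown CA",
    80: "Internal Error",
}


def decode_alert(record: bytes) -> dict:
    """Decode a 7-byte plaintext alert record into readable fields."""
    if len(record) < 7:
        raise ValueError("record too short for an alert")
    if record[0] != 21:
        raise ValueError(f"not an alert record (content type {record[0]})")
    major, minor = record[1], record[2]          # 3.3 means TLS 1.2
    length = int.from_bytes(record[3:5], "big")  # alert body is always 2 bytes
    if length != 2:
        raise ValueError(f"unexpected alert length {length}")
    level, description = record[5], record[6]
    return {
        "version": f"{major}.{minor}",
        "level": ALERT_LEVELS.get(level, f"Unknown ({level})"),
        "description": ALERT_DESCRIPTIONS.get(description, f"Unknown ({description})"),
    }


# Reconstructed alert: type 21, TLS 1.2 (3.3), length 2, Fatal (2), Internal Error (80).
print(decode_alert(bytes([21, 3, 3, 0, 2, 2, 80])))
```

Note that "Internal Error" says only that the sender hit a local failure. It does not say which check failed, which is why the client-side logs are the better clue here.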

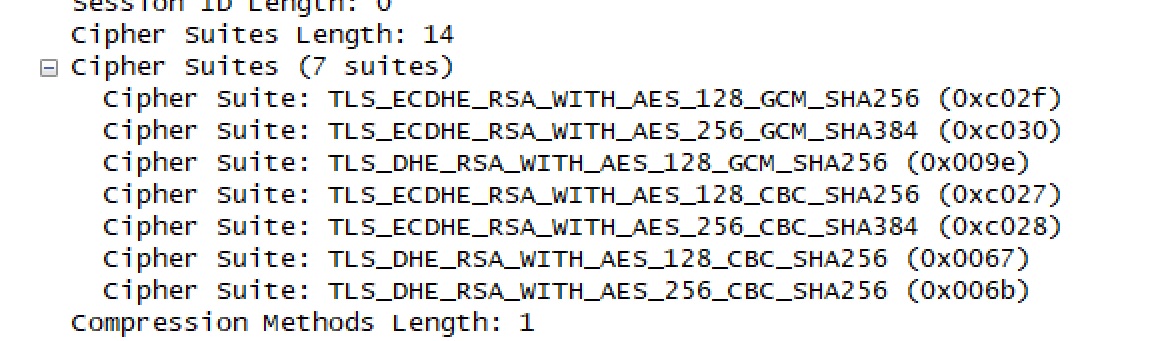

Our nginx cipher configuration:

ssl_ciphers ECDHE-RSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-CBC-SHA256:ECDHE-RSA-AES256-CBC-SHA384:DHE-RSA-AES128-CBC-SHA256:DHE-RSA-AES256-CBC-SHA256;

The client lists the following cipher suites in its Client Hello:

The server selects: TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256 (0xc02f)
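To reason about which suite the server ends up choosing, here is a simplified model of TLS 1.2 suite selection. It is a sketch, not nginx's or OpenSSL's actual logic. Suites are compared only by IANA code point. The server list mirrors the `ssl_ciphers` line above, translated to the code points its names appear to refer to. The client offer is hypothetical because the zero client's list is not reproduced here. nginx's `ssl_prefer_server_ciphers` directive decides whose ordering wins, and the `prefer_server` flag stands in for it.

```python
# Simplified model of TLS 1.2 cipher-suite selection (not nginx/OpenSSL code).
# Suites are IANA code points; real servers also check the certificate key
# type, supported curves and protocol version before accepting a suite.

SUITE_NAMES = {
    0xC02F: "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256",
    0xC030: "TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384",
    0x009E: "TLS_DHE_RSA_WITH_AES_128_GCM_SHA256",
    0xC027: "TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256",
    0xC028: "TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA384",
    0x0067: "TLS_DHE_RSA_WITH_AES_128_CBC_SHA256",
    0x006B: "TLS_DHE_RSA_WITH_AES_256_CBC_SHA256",
}

# Server list in the same order as the ssl_ciphers directive.
SERVER_SUITES = [0xC02F, 0xC030, 0x009E, 0xC027, 0xC028, 0x0067, 0x006B]


def select_suite(client_offer, server_suites, prefer_server=False):
    """Return the chosen suite, or None if the lists do not overlap
    (a real server would then typically send a handshake_failure alert)."""
    common = set(client_offer) & set(server_suites)
    order = server_suites if prefer_server else client_offer
    for suite in order:
        if suite in common:
            return suite
    return None


# Hypothetical client offer; the zero client's real list is not shown here.
client_offer = [0xC02F, 0xC027, 0x003C]
chosen = select_suite(client_offer, SERVER_SUITES)
print(hex(chosen), SUITE_NAMES[chosen])
```

In this model a mismatch would produce no common suite, and the handshake would end before the Certificate message. Because the capture shows 0xc02f being agreed and the certificate being sent, suite selection is probably not where this handshake fails.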

As is often the case, troubleshooting from a screenshot of a few columns of a capture is a frustrating exercise. Can you please provide the capture?

If not, who is sending the fatal alert, the client or the server? If it's the client, I suspect there's something it doesn't like about the server certificate.

Sorry, yes. We tried to sanitize the capture with TraceWrangler, but the output file was useless after sanitizing.

The fatal alert comes from the client; we were capturing on the server side. The server uses a wildcard certificate from GoDaddy.

I think you'll have to debug the client. Is it a browser? If so, have you tried another one? Can you use `openssl s_client ...` to make a debuggable connection?

No, the client is a Teradici zero client trying to establish a connection to its management console over port 5172.
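The `openssl s_client` suggestion can be approximated from a workstation with Python's standard `ssl` module. This is a minimal sketch, assuming Python 3.7+. The host name passed to `probe()` is hypothetical, and the context is pinned to TLS 1.2 and the suite seen in the capture. Because the browsers in this thread were not affected, a successful probe may still not reproduce what the zero client does.

```python
import socket
import ssl


def make_tls12_context(cipher: str = "ECDHE-RSA-AES128-GCM-SHA256") -> ssl.SSLContext:
    """Client context pinned to TLS 1.2 and a single OpenSSL cipher name."""
    ctx = ssl.create_default_context()          # verifies against the system CA store
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.maximum_version = ssl.TLSVersion.TLSv1_2
    ctx.set_ciphers(cipher)                     # restricts the TLS 1.2 suite list only
    return ctx


def probe(host: str, port: int = 5172) -> dict:
    """Do one handshake and report what was negotiated (needs network access)."""
    ctx = make_tls12_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return {
                "version": tls.version(),
                "cipher": tls.cipher()[0],
                "subject": tls.getpeercert().get("subject"),
            }
```

Usage: `probe("mc.example.com")`, with the hypothetical name replaced by the management console's address. A verification failure raises `ssl.SSLCertVerificationError`, which is more informative than a bare "Internal Error" alert.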

If we access the URL in any browser, we can't reproduce the fatal alert. Chrome offers 17 cipher suites and accepts TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256 (0xc02f), which the server selects in its Server Hello.

http://www.teradici.com/web-help/TER1...

Looks like an issue in the client, then.

Thanks for the openssl tip.

Now I'm wondering whether it's an Nginx misconfiguration or, as you said, a problem with the zero client, which for some reason doesn't send the Client Key Exchange, Change Cipher Spec, and Encrypted Handshake Message in the next expected packet.
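The messages the zero client should send next follow from the RFC 5246 full-handshake flow for an ECDHE_RSA suite. This Python sketch writes that flow down and reports where a captured sequence first departs from it. It assumes no client certificate and no session resumption, both of which change the flow. Wireshark shows each encrypted Finished as "Encrypted Handshake Message".

```python
# Expected TLS 1.2 full handshake for an ECDHE_RSA suite, no client
# certificate, no resumption (RFC 5246, section 7.3). ChangeCipherSpec is
# its own record type rather than a handshake message, but is listed in order.
EXPECTED_FLOW = [
    ("client", "ClientHello"),
    ("server", "ServerHello"),
    ("server", "Certificate"),
    ("server", "ServerKeyExchange"),
    ("server", "ServerHelloDone"),
    ("client", "ClientKeyExchange"),
    ("client", "ChangeCipherSpec"),
    ("client", "Finished"),
    ("server", "ChangeCipherSpec"),
    ("server", "Finished"),
]


def first_divergence(observed):
    """Return (index, expected, observed) at the first mismatch, or None
    if every expected step was seen in order."""
    for i, expected in enumerate(EXPECTED_FLOW):
        if i >= len(observed):
            return (i, expected, None)  # capture ended before this step
        if observed[i] != expected:
            return (i, expected, observed[i])
    return None
```

With a hypothetical reconstruction of this capture, `first_divergence(EXPECTED_FLOW[:5] + [("client", "Alert")])` returns `(5, ("client", "ClientKeyExchange"), ("client", "Alert"))`. The client stops right after processing the server's certificate flight, before it commits to a key exchange.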

https://stackoverflow.com/questions/3...

Personally, I still suspect the client: everything else is happy with the server config, and the client sends the alert immediately after receiving the certificate.

The client was failing because the certificates were in the wrong order in the .crt file: the root certificate came before the intermediate, when it should have been last.
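nginx expects the file named by `ssl_certificate` to contain the server certificate first, followed by the intermediate certificates. The reordering fix can be sketched in Python on a simplified model where each certificate is reduced to hypothetical (subject, issuer) names. Real code would read these fields from parsed X.509 certificates and confirm signatures, not just names.

```python
from typing import List, NamedTuple


class Cert(NamedTuple):
    """Hypothetical stand-in for an X.509 certificate: names only."""
    subject: str
    issuer: str

    @property
    def self_signed(self) -> bool:
        return self.subject == self.issuer


def order_chain(leaf: Cert, bundle: List[Cert], include_root: bool = True) -> List[Cert]:
    """Rebuild leaf -> intermediate(s) -> root by following issuer names."""
    by_subject = {c.subject: c for c in bundle}
    chain = [leaf]
    current = leaf
    while not current.self_signed:
        issuer = by_subject.get(current.issuer)
        if issuer is None or issuer in chain:
            break  # issuer missing from the bundle (or a loop): stop here
        chain.append(issuer)
        current = issuer
    if not include_root and len(chain) > 1 and chain[-1].self_signed:
        chain.pop()  # RFC 5246 allows the self-signed root to be omitted
    return chain


# Hypothetical names; the bundle is in the broken order (root before intermediate).
leaf = Cert("*.example.com", "Intermediate CA")
intermediate = Cert("Intermediate CA", "Root CA")
root = Cert("Root CA", "Root CA")
fixed = order_chain(leaf, [root, intermediate])
print([c.subject for c in fixed])  # ['*.example.com', 'Intermediate CA', 'Root CA']
```

Following issuer links from the leaf, rather than trusting file order, is also roughly how tolerant clients such as browsers cope with a misordered bundle.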

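Interesting result. RFC 5246 (TLS 1.2, which you seem to be using), section 7.4.2, says this about the list of certificates:

> certificate_list: This is a sequence (chain) of certificates. The sender's certificate MUST come first in the list. Each following certificate MUST directly certify the one preceding it. Because certificate validation requires that root keys be distributed independently, the self-signed certificate that specifies the root certificate authority MAY be omitted from the chain, under the assumption that the remote end must already possess it in order to validate it in any case.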

So it appears that the zero client was the only one that actually required the certificates in the order the RFC specifies. From the RFC, it also appears that the root certificate can be omitted from the list entirely, which would make its position moot.
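RFC 5246's ordering rule puts the sender's certificate first and requires each following certificate to directly certify the one before it. A strict client can check this mechanically. This Python sketch reduces certificates to hypothetical (subject, issuer) names and treats "directly certifies" as "subject equals the previous certificate's issuer"; a real client would also verify signatures. It accepts the chain with or without the root and rejects a bundle with the root ahead of the intermediate.

```python
from typing import List, NamedTuple


class Cert(NamedTuple):
    """Hypothetical stand-in for an X.509 certificate: names only."""
    subject: str
    issuer: str


def follows_rfc5246_order(chain: List[Cert]) -> bool:
    """RFC 5246, 7.4.2: each certificate after the first must directly
    certify the one before it, i.e. its subject is the previous issuer."""
    if not chain:
        return False
    for previous, following in zip(chain, chain[1:]):
        if previous.issuer != following.subject:
            return False
    return True


leaf = Cert("*.example.com", "Intermediate CA")
intermediate = Cert("Intermediate CA", "Root CA")
root = Cert("Root CA", "Root CA")

print(follows_rfc5246_order([leaf, intermediate, root]))  # True: root included last
print(follows_rfc5246_order([leaf, intermediate]))        # True: root omitted
print(follows_rfc5246_order([leaf, root, intermediate]))  # False: root too early
```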