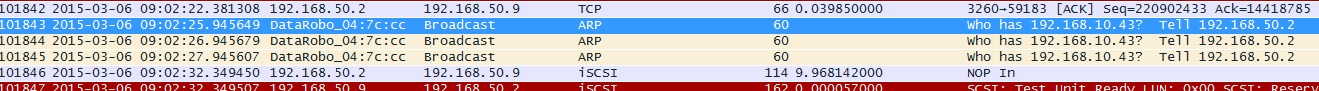

Is there any logic to why an ARP packet would break an iSCSI stream? A Drobo storage device (3 iSCSI ports, 1 management port) is directly connected (no switch) to a dedicated iSCSI NIC on an ESXi server. The ESXi host reports connection loss/restore events on the iSCSI connection while data is being transferred over the interface. A capture taken with tcpdump-uw on the host shows a 9.9 sec delay (tcp.time_delta) right after an ARP broadcast.

- 192.168.50.2/24 is the IP of the iSCSI interface on the Drobo (there is no option to set DNS or a default gateway, which you would not need on an iSCSI interface anyway).
- 192.168.50.9/24 is the IP of the iSCSI NIC on the ESXi host.
- 192.168.10.43 is the IP of the VM running the Drobo management software.
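To make the addressing concrete, here is a small sketch using Python's standard `ipaddress` module with the addresses listed above. It only checks which hosts share the 192.168.50.0/24 subnet with the iSCSI link; a host with that /24 mask should not ARP on this link for 192.168.10.43, since that address is off-subnet and would have to be reached through a gateway. The names `ISCSI_SUBNET`, `HOSTS` and `on_iscsi_subnet` are illustrative only.

```python
import ipaddress

# Subnet and addresses as listed in the question.
ISCSI_SUBNET = ipaddress.ip_network("192.168.50.0/24")
HOSTS = {
    "Drobo iSCSI port": "192.168.50.2",
    "ESXi iSCSI NIC": "192.168.50.9",
    "Drobo management VM": "192.168.10.43",
}


def on_iscsi_subnet(addr: str) -> bool:
    """True if addr lies inside the iSCSI subnet, i.e. is reachable on-link via ARP."""
    return ipaddress.ip_address(addr) in ISCSI_SUBNET


if __name__ == "__main__":
    for name, addr in HOSTS.items():
        print(f"{name:22} {addr:15} on-link={on_iscsi_subnet(addr)}")
```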

Relevant portion of the capture: https://www.cloudshark.org/captures/6b1d422de853

Thank you.

asked 06 Mar '15, 15:12 by net_tech, edited 07 Mar '15, 06:58
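Wireshark's tcp.time_delta field is the time since the previous frame in the same TCP conversation. It is only filled in when the TCP preference "Calculate conversation timestamps" is enabled, and a display filter such as `tcp.time_delta > 5` then highlights stalls like the 9.9 sec one. The sketch below does the same arithmetic on a list of frame timestamps from one stream, for example exported with `tshark -r capture.pcap -Y "tcp.stream==0" -T fields -e frame.time_epoch`. The file name, stream number and 5 s threshold are assumptions, and the sample timestamps are invented for illustration, not taken from the capture.

```python
from typing import List, Tuple


def find_gaps(timestamps: List[float], threshold: float = 5.0) -> List[Tuple[int, float]]:
    """Return (frame_index, delta) for every frame that arrived more than
    `threshold` seconds after the previous frame of the same stream,
    which is what tcp.time_delta reports per frame."""
    gaps = []
    for i in range(1, len(timestamps)):
        delta = timestamps[i] - timestamps[i - 1]
        if delta > threshold:
            gaps.append((i, delta))
    return gaps


if __name__ == "__main__":
    # Hypothetical timestamps (seconds), not from the capture in this thread.
    sample = [0.000, 0.002, 0.004, 9.904, 9.906]
    for index, delta in find_gaps(sample):
        print(f"frame {index}: {delta:.3f} s after previous frame")
```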

2 Answers:

No, unless there is a catastrophic bug in the TCP/IP stack of one of the involved systems.

That's most likely just a coincidence.

Regards

answered 07 Mar '15, 13:30 by Kurt Knochner ♦

The ~10 sec delays in your capture file both involve a "NOP-In" command (NOP stands for No-Operation). So it looks like your iSCSI connection is idle and the iSCSI target is checking whether the connection is still alive.

The ARPs are a different story. They should not be seen on this interface, as the subnet is 192.168.50.0/24. Are you sure you set the subnet mask correctly on the interface of the Drobo? And what is the IP address of the management interface of the Drobo? If the Drobo needs to communicate with the management VM over the 192.168.50.0/24 interface, you will have to configure a default gateway so that it can reach the management VM.

answered 08 Mar '15, 04:07 by SYN-bit ♦♦

Just to confirm: is NOP-In a response to a NOP-Out message? Looking at the trace, it appears that NOP-Out is the response to NOP-In.

192.168.10.16/24 is the management interface of the Drobo, with its default gateway set to 192.168.10.2. There should be no management traffic going over the iSCSI port 192.168.50.2/24. When a default gateway is configured on the management NIC of the Drobo, ARP packets spill into the iSCSI ports; with the gateway removed from the management port, no ARPs are seen on the iSCSI ports.

The default NoopTimeout is 10 sec. It is the amount of time that can lapse before the host receives a NOP-In message, which the iSCSI target sends in response to the NOP-Out request. When the NoopTimeout limit is exceeded, the initiator terminates the current session and starts a new one, which is what I am seeing as a disconnect.

(09 Mar '15, 12:35) net_tech
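A simplified model of the NoopTimeout rule described in the comment above, assuming a single outstanding NOP-Out/NOP-In exchange and the 10 sec default quoted in the thread. The names `NopPing` and `session_action` are hypothetical, and this is not how ESXi implements the check internally; it only illustrates the timing decision. Note that by this rule a 9.9 sec round trip still counts as answered in time.

```python
from dataclasses import dataclass
from typing import Optional

NOOP_TIMEOUT_S = 10.0  # default NoopTimeout quoted in the thread


@dataclass
class NopPing:
    sent_at: float             # when the initiator sent the NOP-Out (seconds)
    reply_at: Optional[float]  # when the matching NOP-In arrived, or None if not yet


def session_action(ping: NopPing, now: float, timeout: float = NOOP_TIMEOUT_S) -> str:
    """Decide what a NoopTimeout-style check would do at time `now`."""
    if ping.reply_at is not None and ping.reply_at - ping.sent_at <= timeout:
        return "ok"       # reply arrived within the limit
    if now - ping.sent_at > timeout:
        return "reset"    # limit exceeded: drop the session and log in again
    return "waiting"      # no reply yet, but still within the limit


if __name__ == "__main__":
    print(session_action(NopPing(0.0, 0.01), now=1.0))
    print(session_action(NopPing(0.0, None), now=10.5))
```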

Thanks Kurt.

So if ARP is out of the picture, could the problem have something to do with MTUs?

I saw a note in this KB article recommending a higher MTU on the switch (no explanation provided): http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1028584

The MTU on the storage device and on the iSCSI port is currently set to 4500.

Well, yes and no. If you set a large MTU but don't enable Jumbo Frame support on the switches, there will be problems. However, in that case you would experience severe problems, AND you mentioned that there is no switch involved. So what's left is a potential problem with Jumbo Frame support in one of the involved NIC drivers. That said, your description sounds more like: it works for a while, then there is a problem. I don't think this is caused by ARP or Jumbo Frames.
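The header arithmetic behind a given MTU can be sketched as follows, assuming IPv4 and TCP headers without options (real iSCSI segments often carry TCP timestamps, which take a few more bytes). The second function gives the largest ICMP payload that fits in one unfragmented packet, the size to use when testing the path from the ESXi host with `vmkping -d -s <size> 192.168.50.2` (`-d` sets don't-fragment). For the 4500 MTU in this thread that is 4472 bytes. The function names are illustrative only.

```python
IPV4_HEADER = 20  # bytes, IPv4 header without options
TCP_HEADER = 20   # bytes, TCP header without options
ICMP_HEADER = 8   # bytes, ICMP echo request/reply header


def tcp_mss(mtu: int) -> int:
    """Largest TCP payload per segment for a given IP MTU (no options)."""
    return mtu - IPV4_HEADER - TCP_HEADER


def max_df_ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one packet with don't-fragment set."""
    return mtu - IPV4_HEADER - ICMP_HEADER


if __name__ == "__main__":
    for mtu in (1500, 4500, 9000):
        print(f"MTU {mtu}: TCP MSS {tcp_mss(mtu)}, vmkping -d -s {max_df_ping_payload(mtu)}")
```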

So, the problem might be at a totally different layer. I see at least the following possibilities:

Kurt,

I found the following message in the host log: "committing txn callerID: 0xc1d0000f to slot 0: IO was aborted by VMFS via a virt-reset on the device". That explains the 10 sec delay, as the storage was issued a reset command.

This NAS has 3 iSCSI ports, but only 1 port is connected and configured.

If a file is read from or written to the NAS volume using the vSphere Client, no resets/disconnects are reported. However, if you start a VM that lives on that datastore, the host reports storage disconnects.

This blog post shows the same iSCSI errors we see on our host and brings up two possible reasons:

http://blogs.vmware.com/kb/2012/04/storage-performance-and-timeout-issues.html

Thank you