TCP is limiting the use of bandwidth

I am trying to determine why a Windows 2008 R2 server is throttling the bandwidth for file transfers from the USA to Malaysia. We have a 50 Mbps MPLS circuit that typically shows only about 30-35% utilization. When I send a single file from server A, in the US, to server B, in Malaysia, I get a transfer speed of about 130-300 kb/sec. However, if I send multiple files simultaneously, each transfer runs at roughly that same speed, until the combined rate reaches about 3,500-4,000 kb/sec.
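One rough way to see why parallel transfers add up is to treat each TCP stream as capped at (bytes in flight) / (round-trip time), with the sum of all streams capped at the circuit's unused capacity. This is only a model under stated assumptions: the 250 ms round-trip time is an assumed US-to-Malaysia value, not a measurement; the 82,000-byte per-stream limit is the in-flight figure seen in the Wireshark capture; and 35% background utilization is the upper end of the range quoted above. The function names are hypothetical.

```python
# Hypothetical model: each TCP stream is limited to window_bytes / rtt_s,
# and parallel streams add up until the circuit's free capacity is used.


def stream_ceiling_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound on one window-limited stream, in bits per second."""
    return window_bytes * 8 / rtt_s


def aggregate_bps(n_streams: int, window_bytes: int, rtt_s: float,
                  circuit_bps: float, utilisation: float) -> float:
    """Combined rate of n streams, capped by the circuit's unused capacity."""
    free_bps = circuit_bps * (1.0 - utilisation)
    return min(n_streams * stream_ceiling_bps(window_bytes, rtt_s), free_bps)


if __name__ == "__main__":
    RTT_S = 0.25      # ASSUMED US-Malaysia round-trip time, not measured
    WINDOW = 82_000   # bytes in flight observed in the capture
    CIRCUIT = 50e6    # 50 Mbps MPLS circuit
    BUSY = 0.35       # circuit already ~35% utilised by other traffic
    for n in (1, 2, 4, 8, 16):
        rate = aggregate_bps(n, WINDOW, RTT_S, CIRCUIT, BUSY)
        print(f"{n:2d} streams: {rate / 1e6:.2f} Mbps")
```

Under these assumptions a single stream tops out near 2.6 Mbps (about 328 KB/s), so roughly a dozen streams would be needed to use the free capacity. Substituting the RTT measured in the capture would make the estimate more meaningful.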

Server A (the sender) runs Windows Server 2012 R2; Server B (the receiver) runs Windows Server 2008 R2.

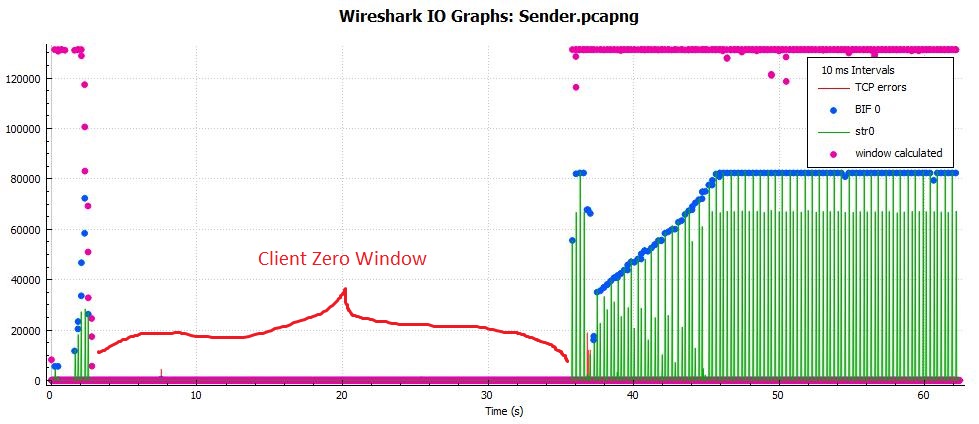

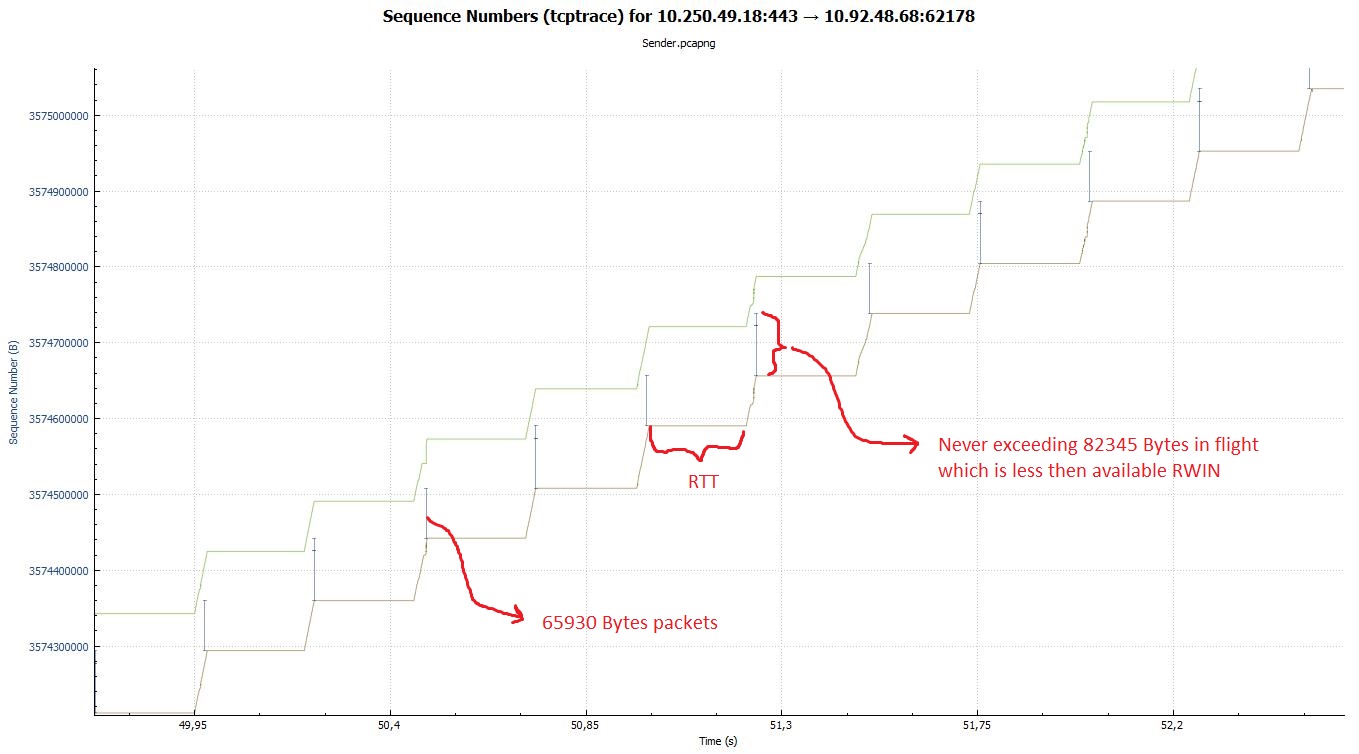

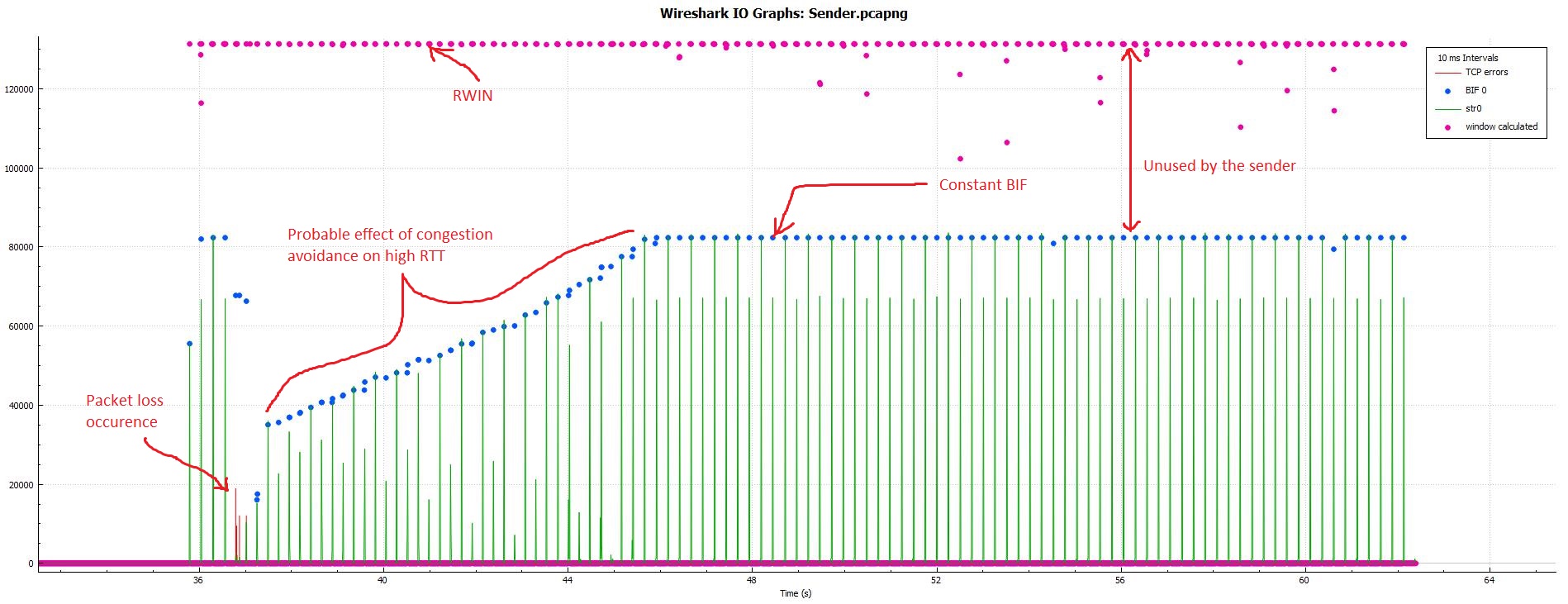

Using Wireshark, I can see that the initial handshake advertised a window size of 8192 with a scaling factor of 256, that the window size then quickly settled at 513, and that there are never more than about 82 KB in flight. I am trying to determine which settings on the two servers drive this behavior, and whether I can adjust them so that a single file transfer uses closer to the full available bandwidth.
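The window figures in the capture can be related with a little arithmetic. Per RFC 7323, the window field in SYN and SYN-ACK segments is never scaled, while later segments are multiplied by the negotiated factor (256 here, i.e. a shift count of 8). The sketch below converts raw window values to bytes and estimates the bandwidth-delay product, the window a single stream would need to fill 50 Mbps. The 250 ms RTT is an assumption and the helper names are illustrative.

```python
import math


def effective_window(raw_window: int, scale_factor: int, is_syn: bool) -> int:
    """Advertised window in bytes.

    RFC 7323: the window field of a SYN or SYN-ACK is never scaled.
    """
    return raw_window if is_syn else raw_window * scale_factor


def max_window(scale_factor: int) -> int:
    """Largest window expressible with the 16-bit field and this scale factor."""
    return 0xFFFF * scale_factor


def window_needed(rate_bps: float, rtt_s: float) -> int:
    """Bandwidth-delay product: bytes that must be in flight to sustain rate_bps."""
    return math.ceil(rate_bps * rtt_s / 8)


if __name__ == "__main__":
    SCALE = 256    # scaling factor seen in the handshake (shift count 8)
    RTT_S = 0.25   # ASSUMED round-trip time; read the real one from the capture
    print("handshake window:", effective_window(8192, SCALE, is_syn=True), "bytes")
    print("later window:", effective_window(513, SCALE, is_syn=False), "bytes")
    print("max with this scale:", max_window(SCALE), "bytes")
    print("needed for 50 Mbps:", window_needed(50e6, RTT_S), "bytes")
```

If the factor of 256 applies to that direction, a raw value of 513 means 513 × 256 = 131,328 bytes rather than 513 bytes, while sustaining 50 Mbps over an assumed 250 ms path would need about 1,562,500 bytes in flight.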

Hello,

This kind of analysis (TCP performance issues) requires looking at packet captures (PCAPs), preferably ones taken on the sender side. Could you please share them, if possible?
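A sender-side capture matters because bytes in flight are defined from the sender's point of view: data it has transmitted minus data the receiver has acknowledged, as the sender sees them. The simplified sketch below computes that from a hypothetical list of segments; the `Segment` type and its fields are illustrative, and the code ignores SACK, retransmissions and sequence-number wraparound. Wireshark's `tcp.analysis.bytes_in_flight` field reports a similar quantity.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Segment:
    """One packet from a hypothetical, simplified sender-side trace."""
    direction: str   # "out" = data sent by server A, "in" = ACK from server B
    seq: int = 0     # relative sequence number of the first payload byte
    length: int = 0  # payload length in bytes
    ack: int = 0     # cumulative ACK number (used only for "in")


def bytes_in_flight(trace: Iterable[Segment]) -> List[int]:
    """Unacknowledged bytes after each outgoing data segment."""
    highest_sent = 0
    highest_acked = 0
    samples: List[int] = []
    for seg in trace:
        if seg.direction == "out" and seg.length > 0:
            highest_sent = max(highest_sent, seg.seq + seg.length)
            samples.append(highest_sent - highest_acked)
        elif seg.direction == "in":
            highest_acked = max(highest_acked, seg.ack)
    return samples


if __name__ == "__main__":
    trace = [
        Segment("out", 0, 1460),
        Segment("out", 1460, 1460),
        Segment("in", ack=1460),
        Segment("out", 2920, 1460),
    ]
    print(bytes_in_flight(trace))
```

On a receiver-side capture the same calculation would see data later and ACKs earlier than the sender does, which can understate what the sender actually had outstanding.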

https://www.dropbox.com/s/wizk8jjx265... Here you go: I started a transfer and captured it on both ends, let it run long enough to settle into a steady state, and then cancelled it.