Your throughput limitation isn’t related to receive window sizes or to network bandwidth limits. It is entirely limited by the rate at which the sending server delivers data to the network. It appears to be a send buffer limitation with a buffer size of 128 KB (although it could also be FTP limiting how fast it hands data to TCP in the server).

There are never more than 128 KB in flight, and data is sent in blocks of 64 KB, so there are never more than 2 x 64 KB “blocks” in flight. A new 64 KB “block” cannot begin to be transmitted until the whole 64 KB block from two bursts ago has been acknowledged.

Unfortunately, because the capture was taken inside the server, we see packets that appear to be very large. They would have been broken up into normal-sized packets after we captured them, as they left the server.

The bursts of 64 KB always appear as one very large 63,648-byte packet followed by a smaller 1,888-byte packet. The agreed MSS is 1260, so in real life our 64 KB burst would appear on the wire as 52 or 53 normal-sized packets. The last packet in the burst has the PUSH flag set. The sender has to wait for the ACK to that last packet before it starts a new 64 KB block.
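The packet count above can be checked with a short sketch. It assumes every on-the-wire packet carries exactly one MSS of payload (no TCP options eating into the 1260 bytes) and that each captured segment is split independently; the function name `wire_packets` is made up for illustration.

```python
# Sizes taken from the capture described above; all values are in bytes.
MSS = 1260                          # agreed MSS from the handshake
CAPTURED_SEGMENTS = [63_648, 1_888]  # the two oversized "packets" seen per burst


def wire_packets(payload_len: int, mss: int) -> int:
    """Packets needed to carry payload_len bytes at mss bytes each (ceiling division)."""
    return -(-payload_len // mss)


burst_bytes = sum(CAPTURED_SEGMENTS)
packets_per_segment = [wire_packets(n, MSS) for n in CAPTURED_SEGMENTS]

print(f"burst size: {burst_bytes} bytes ({burst_bytes // 1024} KB)")
print(f"wire packets per captured segment: {packets_per_segment}")
print(f"wire packets per burst: {sum(packets_per_segment)}")
```

Under those assumptions the two captured segments add up to exactly 64 KB and split into 51 + 2 packets, in line with the 52 or 53 estimated above.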

You need to check your server configuration for anything that would limit the output to 128 KB per RTT. Possible culprits could be send buffer space limits in TCP, a setting in the FTP server or something else.

On the network or client side, you should investigate the “extra 45 ms”, which will make more sense when you see the charts below.

It would be good to see a simultaneous capture from both the sender and receiver.

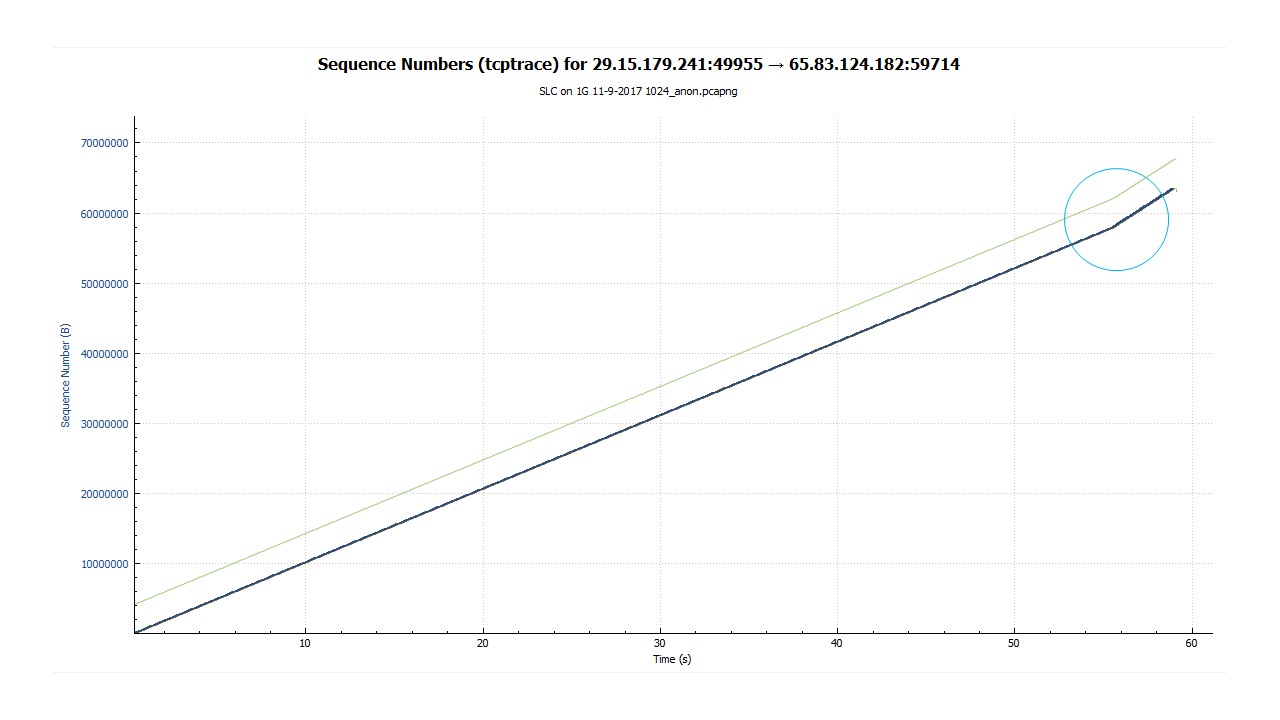

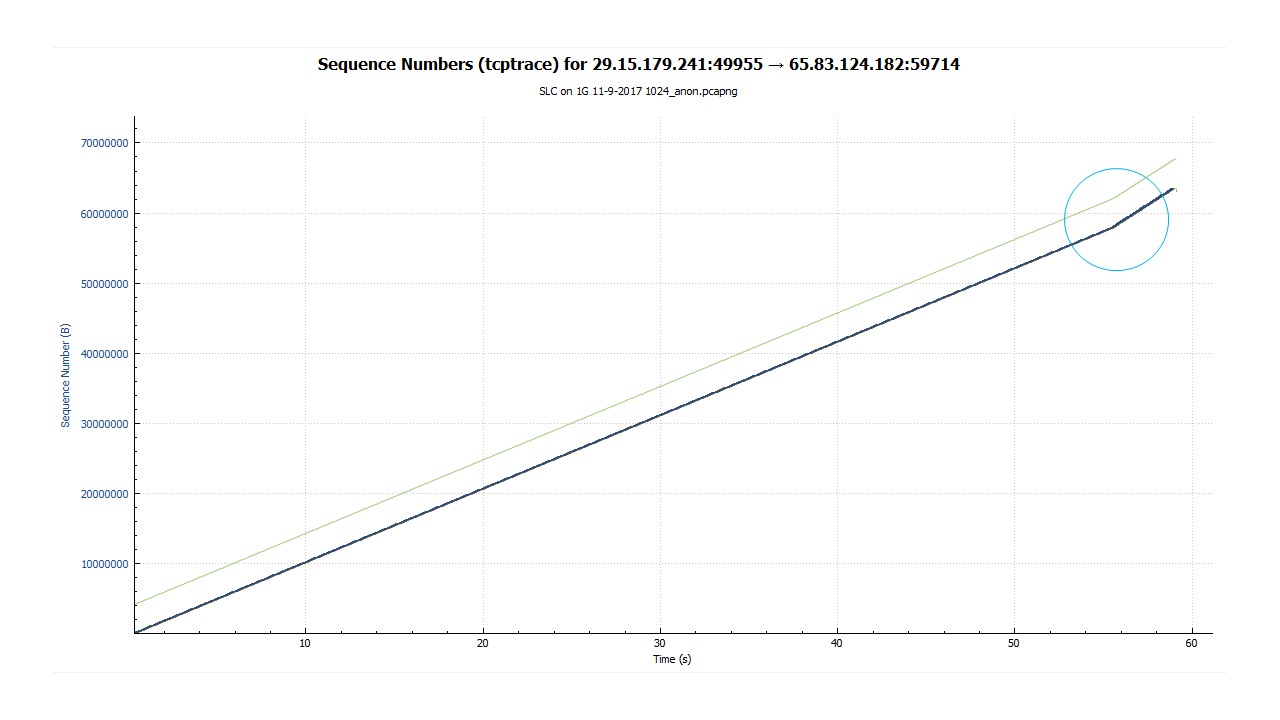

Some TCP-Trace charts tell the story.

The flow rate (slope of the packets) is very consistent for most of the flow, but we do see an increase just before the end. Something changes in the blue circle.

In the consistent part of the early flow, there are 2 x 64 KB bursts every 125 ms. The minimum RTT is 76 ms and we see the ACK for the first packet in the burst arrive after 76 ms. The ACK for the second-last packet in the burst arrives after 80 ms.
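As a rough sanity check, the numbers in this paragraph translate into a throughput figure. This is only a sketch: it assumes exactly 2 x 64 KB is delivered per 125 ms cycle, and the "best case" line assumes the same 128 KB cap with a cycle equal to the 76 ms minimum RTT, which is a hypothetical and not something seen in the capture.

```python
BLOCK_BYTES = 64 * 1024   # one burst
BLOCKS_PER_CYCLE = 2      # never more than two bursts in flight
CYCLE_S = 0.125           # observed spacing between pairs of bursts
MIN_RTT_S = 0.076         # minimum RTT seen in the capture


def throughput_mbps(bytes_per_cycle: int, cycle_s: float) -> float:
    """Throughput in Mbps when bytes_per_cycle are delivered every cycle_s seconds."""
    return bytes_per_cycle * 8 / cycle_s / 1e6


bytes_per_cycle = BLOCK_BYTES * BLOCKS_PER_CYCLE
observed = throughput_mbps(bytes_per_cycle, CYCLE_S)
best_case = throughput_mbps(bytes_per_cycle, MIN_RTT_S)  # hypothetical cycle of one RTT

print(f"observed:  {observed:.1f} Mbps")
print(f"best case: {best_case:.1f} Mbps with the same 128 KB cap")
```

Either way, a 128 KB cap per cycle keeps the transfer in the region of 8 to 14 Mbps.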

We can deduce that if we had a packet capture taken at the receiver, the stack of real-life normal-sized packets would look similar to the ACK line in the blue circled area. That is, the last packet would be 4 ms behind the first. Based on the rate at which we see ACKs, it would appear that there is an available bandwidth of somewhere around 100 Mbps.
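To put that bandwidth estimate in perspective, here is a minimal sketch of the bandwidth-delay product. It takes the rough 100 Mbps figure and the 76 ms minimum RTT at face value, so the result is only as good as those two estimates.

```python
BANDWIDTH_BPS = 100_000_000   # rough estimate from the ACK rate, bits per second
RTT_MS = 76                   # minimum RTT seen in the capture
IN_FLIGHT_CAP = 128 * 1024    # bytes the sender actually keeps in flight

# Bytes that must be in flight to keep a path of this bandwidth and RTT full.
bdp_bytes = BANDWIDTH_BPS * RTT_MS // 1000 // 8
fill_ratio = IN_FLIGHT_CAP / bdp_bytes

print(f"bandwidth-delay product: {bdp_bytes} bytes")
print(f"128 KB in flight fills about {fill_ratio:.0%} of the path")
```

If the estimates hold, the path could carry roughly 950 KB in flight, so the sender's 128 KB is a small fraction of what the network appears able to absorb.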

The most important piece of information on this chart is that the ACK for the very last packet in the 64 KB burst is always an extra 45 ms later than we might expect.

The red circles show the ACK line ...

Can you share a trace with us?

How can I share a trace in a secure way?

Hi. Here it is: https://www.dropbox.com/s/kx6dyt9bf3p...

This was captured on the interface closest to the server.

So the TCP window size stays the same (4194304) from packet 301 almost all the way to the end. The customer says it is due to some network bandwidth buffers. How can I refute this based on the capture info?

From what I understand, there are only two factors to check: bandwidth and latency (besides retransmissions, which are not present). For latency, the RTT is 0.076 s. How do we check whether bandwidth can be the problem here?

Maybe I should rephrase my question: what determines the final window scaling factor? For example, the FTP client initiates a TCP connection and has a 1 Gbps interface. Window scaling is enabled.

The RTT is 76 ms. The original SYN packet had: MSS 1460, window size value 65535, window scale 7 (multiply by 128).

The SYN-ACK from the opposite side had: MSS 1260, window size value 8192, window scale 14 (multiply by 16384).

The final ACK had: window size value 32768, window size scaling factor 128, calculated window size 4194304.

(For the rest of the FTP transfer it stayed at this value: 4194304.)
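The arithmetic behind these handshake values can be sketched as follows. Two facts from the TCP window scale option (RFC 7323) are relied on: each side announces its own shift count, which applies only to the windows that side advertises, and the window field in a SYN or SYN-ACK is itself never scaled. The helper name `effective_window` is made up for illustration, and the throughput ceiling assumes the whole advertised window is used once per 76 ms RTT.

```python
RTT_S = 0.076  # RTT reported for this connection


def effective_window(window_field: int, shift: int) -> int:
    """Receive window in bytes: the 16-bit window field shifted left by the sender's scale."""
    return window_field << shift


# Client (connection initiator): shift 7 announced in its SYN.
client_multiplier = 1 << 7                      # 128
client_window = effective_window(32768, 7)      # window advertised in the final ACK

# Server: shift 14 announced in its SYN-ACK, used only for the server's own windows.
server_multiplier = 1 << 14                     # 16384

# Most data one RTT could carry if the sender filled the client's whole window.
ceiling_mbps = client_window * 8 / RTT_S / 1e6

print(f"client multiplier: {client_multiplier}, window: {client_window} bytes")
print(f"server multiplier: {server_multiplier}")
print(f"receive-window ceiling: about {ceiling_mbps:.0f} Mbps at {RTT_S * 1000:.0f} ms RTT")
```

So 32768 with a multiplier of 128 is the same 4194304-byte window, and a window of that size would allow on the order of 440 Mbps at this RTT.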

So my questions regarding the initiating side (the FTP client) are:

1) How did the window size value end up reduced to 32768 on the initiating side? 2) Why, if the interface is set to 1 Gbps, did it use 7 as the window scale and not 14? What could have determined that? Is it ...